ChatGPT Health and AI Therapy Apps: Different Roles in Mental Health Support

- James Colley

- Jan 8

A Changing Landscape for Mental Health Support

Artificial intelligence has quickly become woven into how people work, communicate, and, increasingly, think about their mental health. In recent years, talking to an AI about stress, anxiety, or difficult feelings has moved from novelty to normal. For many, an empathetic conversation, even one conducted entirely in text, feels accessible and low-barrier at a time when traditional support channels may feel out of reach. That cultural shift is significant: it reflects a growing willingness to engage with digital support and challenges long-standing stigma around mental health.

As more people experiment with AI for emotional support, a natural question arises: if a general AI tool like ChatGPT can hold a thoughtful conversation about feelings and mental experiences, what role is left for specialised AI therapy platforms? At first glance, the two may seem to serve the same purpose. Look more closely, however, and it becomes clear that they play different roles, with distinct responsibilities, designs, and implications for safety and long-term wellbeing.

Understanding these differences also helps answer a related question: where do AI therapy apps fit on the spectrum of mental health support? We explore that question in depth in our pillar piece on the best AI therapy apps of 2025, which assesses how specialised tools are meeting the particular demands of ongoing mental health care.

Conversation and Care Are Not the Same Thing

The conversational abilities of modern AI are impressive. These systems can reflect emotional language, respond to distress with apparent empathy, and help users articulate thoughts that might be difficult to express otherwise. For someone who might not be ready to speak to another person, or who is seeking an initial outlet, that experience can feel supportive. There is real value in being able to express oneself without fear of judgement or interruption.

Yet a crucial distinction must be made here: a supportive conversation is not the same as therapeutic care. The impression of support in a single interaction should not be mistaken for actual progress. Care, in the clinical and behavioural sense, is not defined by how someone feels after one interaction; it is defined by what happens over time. Therapeutic support is longitudinal, not transactional. It involves patterns, structure, and guided progression. An empathetic response today does not equate to measurable improvement tomorrow.

This distinction matters because mental health care is concerned not with isolated moments of understanding but with ongoing change. Being heard is valuable, but it is only one part of a much larger process.

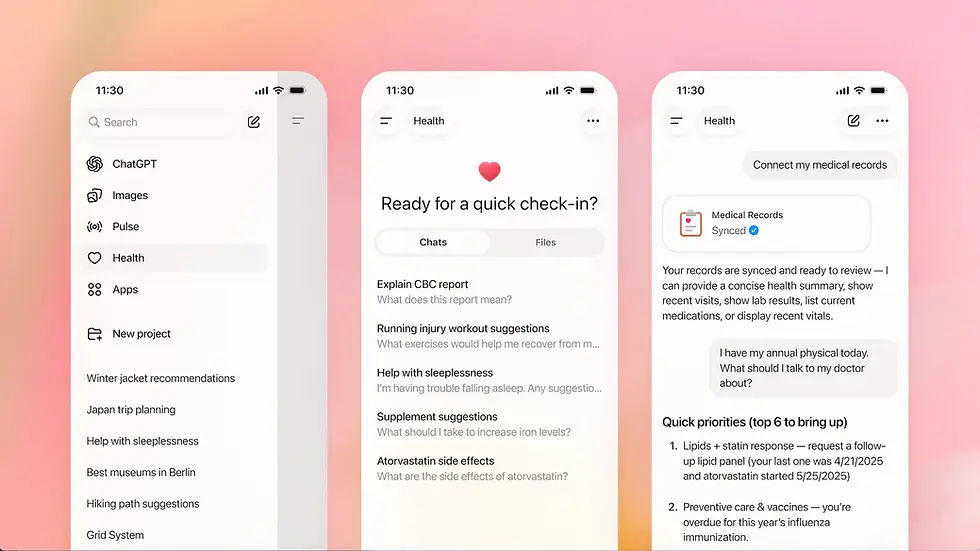

What ChatGPT Health Is Designed to Do

ChatGPT, including what some call “ChatGPT Health,” is a general-purpose AI system. Its strength lies in breadth and adaptability across domains, topics, and conversational contexts. It is not engineered to specialise in mental health care, and the reasons go beyond capability.

Because it must serve a broad, global user base safely, it is intentionally conservative in areas where risk is high. In the context of mental health, this means avoiding responsibility for diagnosis, treatment, or sustained care. ChatGPT cannot be relied upon to manage someone’s emotional wellbeing over time, to recognise and adapt to changing risk levels, or to escalate safety concerns in ways that a dedicated system can. It also avoids creating dependency or positioning itself as a substitute for professional or structured support.

These constraints are not inherent shortcomings; they are deliberate design choices. A general AI operating at global scale cannot ethically or legally assume responsibility for an individual’s mental health outcomes, nor should it. Its purpose is to provide useful information and support reflection, not to serve as the foundation of a care system.

Within these boundaries, ChatGPT can still play a meaningful role. It helps people think, reflect, and sometimes feel less alone in a moment of uncertainty. But it stops short of providing the type of structured, ongoing support that defines therapeutic intervention.

Mental Health Is a Longitudinal Problem

Mental health is not something that changes in isolated moments; it evolves over time, shaped by patterns of thought, behaviour, stressors, and supports. Genuine progress—whether in reducing anxiety, improving self-regulation, or strengthening resilience—is measured across weeks, months, and years, not within the span of a single conversation.

This means effective mental health support requires continuity. It requires systems that can hold context across time, recognise patterns that recur across sessions, and adapt support as needs evolve. For individuals navigating ongoing challenges, whether chronic stress, mood disorders, or adjustment difficulties, this continuity is not optional; it is essential.

It is precisely this longitudinal dimension that specialised AI therapy apps are designed to address. Unlike general conversational tools, these platforms are built to hold and interpret patterns over time, to integrate evidence-based frameworks, and to support users on a sustained path of reflection and growth.

The Role of AI Therapy Apps

AI therapy apps occupy a purpose-built space in the ecosystem of digital mental health. These systems are designed not simply to respond well in a single moment, but to support meaningful, measurable engagement over time. Their architecture reflects this commitment to continuity, structure, and safety.

Where general AI avoids responsibility by design, AI therapy apps are explicit about what they can do, where their limits lie, and how they handle safety concerns. They integrate evidence-based methods such as cognitive behavioural frameworks, reflective journaling, and progress tracking to help users build skills and insight beyond a single conversation. This is not simply a better or more advanced chatbot; it is a system intentionally designed to support care rather than conversation alone.

If you want to understand how different AI therapy platforms implement these elements in practice, our guide to the best AI therapy apps of 2025 explores a range of approaches and the real-world trade-offs across the category.

Regulation, Responsibility, and Trust

Mental health care is regulated because the stakes are high. When support goes wrong, the consequences are not merely uncomfortable; they can be harmful. Accountability, clarity of scope, and well-defined escalation pathways are critical, not optional.

Large general AI platforms are structurally incentivised to minimise exposure in high-liability domains. They must remain non-committal and avoid overreach. Dedicated AI therapy apps, on the other hand, build responsibility into their design. They operate with clear disclaimers, safety protocols, and explicit definitions of what they are offering and what they are not. This is not red tape; it is trust infrastructure. It is what allows people, organisations, and clinicians to engage with these systems in a way that is transparent and accountable.

Understanding these distinctions helps organisations and users make informed decisions about where and how digital support fits into their lives and their organisations.

Why the Difference Matters in the Workplace

The divergence between general AI and AI therapy apps becomes even clearer when viewed through the lens of organisational responsibility. Employers, insurers, and governance bodies do not think in terms of novelty or convenience. They think in terms of duty of care. Supporting the mental health of employees is not a feature to be toggled on or off; it is an obligation with legal, ethical, and human implications for risk management.

An organisation that takes this duty seriously is unlikely to rely on a general conversational AI to fulfil that role, because doing so would create ambiguity around responsibility, escalation, and measurable outcomes. Instead, organisations look to platforms explicitly designed for mental health support, with defined boundaries, accountability mechanisms, and governance structures. This is why enterprises adopt systems, not tools.

For readers who want a deeper comparison of the specialised mental health platforms available today, and of what differentiates them, our article on the best AI therapy apps of 2025 is an important companion to this discussion.

Complementary Roles, Not Competition

Seen this way, ChatGPT Health and AI therapy apps are not competing for the same role; they are complementary. ChatGPT’s impact on the mental health landscape may be cultural as much as technical. It has helped normalise talking to AI about emotions and made discussing stress and mental load feel more approachable. What once seemed uncomfortable now feels ordinary to many people. That shift reduces stigma and can lower the barrier to seeking structured support.

General AI opens the door. AI therapy apps provide the structure on the other side. The value of each depends on where someone is in their journey. General AI can help people articulate experiences and explore context. Therapy platforms are where the work of sustained support happens.

Looking Ahead

The real risk in AI and mental health is not that general AI will replace purpose-built therapy systems. The risk is confusing surface similarities with functional equivalence—mistaking conversation for care, empathy for treatment, and accessibility for accountability. Mental health support requires continuity, contextual memory, boundary design, and responsible escalation. Anything less eventually erodes trust, and trust is the currency of care.

The future of mental health support will be layered and nuanced. General AI will help people explore and articulate their experiences. AI therapy apps will provide structured, ongoing engagement. Human clinicians will continue to focus on the most complex, acute, or high-risk cases. Employers will adopt systems that meet ethical, legal, and human requirements, not improvisational tools.

This is not about choosing one over another. It is about recognising that mental health is a multidimensional problem that requires a differentiated ecosystem of solutions.

Conclusion

As AI becomes more deeply embedded in how people seek and receive support, clarity about roles, responsibilities, and design intentions will matter more than ever. ChatGPT Health and AI therapy apps address different needs within the broader landscape of mental wellbeing. Understanding those differences—and linking them to practical choices about support pathways—is essential for individuals, organisations, and clinicians alike.

For readers seeking an in-depth look at how current AI therapy platforms approach this work, and how they compare with one another, be sure to explore our companion piece on the best AI therapy apps of 2025.
